AllenNLP: reverse sequence example

The joeynmt tutorial on the reverse task inspired me to replicate it using AllenNLP.

This post was tested with Python 3.7 and AllenNLP 0.8.4.

Data

Download the generator script from joeynmt:

    mkdir -p tools; cd tools
    wget https://raw.githubusercontent.com/joeynmt/joeynmt/master/scripts/generate_reverse_task.py
    cd ..

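As a prerequisite for running the script, allennlp should already be installed (it pulls in numpy, which the script needs).

AllenNLP's seq2seq dataset reader expects tab-separated files with one source/target pair per line, so the generated data needs to be converted into that format.

`paste`, used for this conversion, should be available on every Unix installation.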
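A minimal sketch of the conversion, assuming the generator wrote `train.src`/`train.trg`, `dev.src`/`dev.trg` and `test.src`/`test.trg` into a single directory (check the script for the actual file names and location). The function name `to_tsv` is hypothetical; the output paths match `data/train.csv`, `data/dev.csv` and `data/test.csv` from the configuration below.

```shell
# Hypothetical helper: join each <split>.src/<split>.trg pair into one
# tab-separated file, i.e. one "source<TAB>target" line per example.
to_tsv() {
  src_dir="$1"   # directory holding the generated .src/.trg files (assumed layout)
  out_dir="$2"   # directory the AllenNLP config reads from, e.g. "data"
  mkdir -p "$out_dir"
  for split in train dev test; do
    # paste joins line N of both files, separated by a TAB (its default delimiter)
    paste "$src_dir/$split.src" "$src_dir/$split.trg" > "$out_dir/$split.csv"
  done
}
```

Usage: `to_tsv path/to/generated data`. Because `paste` works line by line, source and target files must have the same number of lines in the same order.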

Configuration

This configuration is as close as possible to the one given by the joeynmt tutorial (reverse.yaml).

    {
      "dataset_reader": {
        "type": "seq2seq",
        "source_tokenizer": { "type": "word" },
        "target_tokenizer": { "type": "word" }
      },
      "train_data_path": "data/train.csv",
      "validation_data_path": "data/dev.csv",
      "test_data_path": "data/test.csv",
      "model": {
        "type": "simple_seq2seq",
        "max_decoding_steps": 30,
        "use_bleu": true,
        "beam_size": 10,
        "attention": {
          "type": "bilinear",
          "vector_dim": 128,
          "matrix_dim": 128
        },
        "source_embedder": {
          "tokens": { "type": "embedding", "embedding_dim": 16 }
        },
        "encoder": {
          "type": "lstm",
          "input_size": 16,
          "hidden_size": 64,
          "bidirectional": true,
          "num_layers": 1,
          "dropout": 0.1
        }
      },
      "iterator": {
        "type": "bucket",
        "batch_size": 50,
        "sorting_keys": [["source_tokens", "num_tokens"]]
      },
      "trainer": {
        "cuda_device": 0,
        "num_epochs": 100,
        "learning_rate_scheduler": {
          "type": "reduce_on_plateau",
          "factor": 0.5,
          "mode": "max",
          "patience": 5
        },
        "optimizer": { "lr": 0.001, "type": "adam" },
        "num_serialized_models_to_keep": 2,
        "patience": 10
      }
    }

Change cuda_device to -1 if you have no GPU.
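The sizes in the configuration depend on each other. As I understand `simple_seq2seq`, the decoder's hidden size is set to the encoder's output size, and the bilinear attention compares a decoder state (`vector_dim`) with the encoder outputs (`matrix_dim`). A bidirectional LSTM concatenates both directions, which is where 128 comes from:

```latex
\begin{aligned}
\text{input\_size} &= \text{embedding\_dim} = 16 \\
d_{\text{enc}} &= 2 \times \text{hidden\_size} = 2 \times 64 = 128 = \text{matrix\_dim} \\
d_{\text{dec}} &= d_{\text{enc}} = 128 = \text{vector\_dim}
\end{aligned}
```

If you change `hidden_size` or `bidirectional`, the two attention dimensions have to be adjusted accordingly.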

How to train
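A minimal sketch of the training step, assuming the configuration above is saved as `reverse.json` (hypothetical name) and `output` is used as serialization directory, so that the trained archive ends up at `output/model.tar.gz` as in the prediction step. The wrapper `train_reverse` and its `NO_GPU` switch are my own illustration; the switch uses the `--overrides` option of `allennlp train` instead of editing `cuda_device` by hand.

```shell
# Hypothetical wrapper around "allennlp train".
# Assumption: the config file is reverse.json unless another path is given.
train_reverse() {
  config="${1:-reverse.json}"
  if [ "${NO_GPU:-0}" = 1 ]; then
    # Train on the CPU by overriding trainer.cuda_device without editing the file
    allennlp train "$config" -s output --overrides '{"trainer": {"cuda_device": -1}}'
  else
    allennlp train "$config" -s output
  fi
}
```

Usage: `train_reverse` on a GPU machine, or `NO_GPU=1 train_reverse` without one.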

How to predict one sequence

    echo '{"source": "15 28 32 4", "target": "4 32 28 15"}' > reverse_example.json
    allennlp predict output/model.tar.gz reverse_example.json --predictor simple_seq2seq

Results in:

    {
      "class_log_probabilities": [
        -0.000591278076171875, -9.015453338623047, -9.495574951171875,
        -9.83004093170166, -10.022026062011719, -10.089068412780762,
        -10.098409652709961, -10.247438430786133, -10.416641235351562,
        -10.431619644165039
      ],
      "predictions": [
        [24, 17, 22, 4, 3],
        [24, 16, 22, 4, 3],
        [24, 15, 22, 4, 3],
        [24, 35, 22, 4, 3],
        [24, 17, 6, 4, 3],
        [24, 17, 14, 4, 3],
        [24, 17, 22, 12, 3],
        [24, 17, 22, 10, 3],
        [24, 17, 22, 5, 3],
        [24, 11, 22, 4, 3]
      ],
      "predicted_tokens": ["4", "32", "28", "15"]
    }
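`class_log_probabilities` holds one score per beam (`beam_size` is 10 here) and `predictions` holds the matching token-id sequences; as far as I can tell, `predicted_tokens` is decoded from the first, highest-scoring beam. Assuming the scores are natural-log probabilities of each whole sequence, exponentiating them shows how strongly the top beam dominates. The helper `beam_probs` is hypothetical and only wraps awk's `exp()`:

```shell
# Hypothetical helper: convert beam log-probabilities into probabilities, exp(log p).
beam_probs() {
  for lp in "$@"; do
    awk -v lp="$lp" 'BEGIN { printf "%.6f\n", exp(lp) }'
  done
}

# Top two beams from the output above; prints 0.999409 and 0.000122
beam_probs -0.000591278076171875 -9.015453338623047
```

So the model assigns roughly 99.94% probability to the correctly reversed sequence `4 32 28 15`, while the runner-up beam is far behind.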

Some training insights

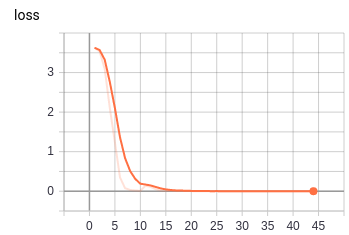

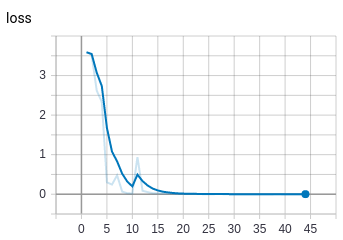

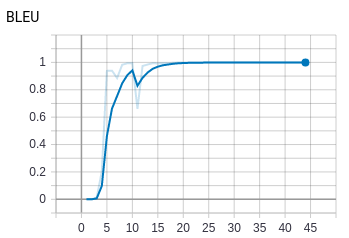

The plots (using tensorboard) showing expected training loss and validation loss. The BLEU score is nearly 1.0.

Training loss:

Validation loss:

BLEU:
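A minimal sketch for opening these curves yourself, assuming AllenNLP 0.8.x writes its TensorBoard event files to `<serialization_dir>/log` (with `train` and `validation` subfolders) and that training used `-s output`. The helper name `show_curves` is hypothetical:

```shell
# Hypothetical helper: point TensorBoard at AllenNLP's log folder.
# Assumption: event files live in <serialization_dir>/log; default dir is "output".
show_curves() {
  tensorboard --logdir "${1:-output}/log"
}
```

Usage: `show_curves`, then open the URL TensorBoard prints (by default on port 6006).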

Future work

The attention visualizations shown in the joeynmt tutorial are not yet implemented in AllenNLP. They will (hopefully) be the subject of a future blog post.