I bought a Seeed XIAO BLE nRF52840 because the bluetooth part seems to be supported in Rust and Micropython, which is not the case for the Raspberry PI Pico yet.

First I tried to bring some Rust code on the XIAO nRF but I failed.

So MicroPython it is for now.

First step will be to get the LEDs on the board to blink.

Then a bit of Bluetooth exploration and finally connecting an OLED display.

Flashing Micropython on the XIAO nRF is the same as for any other Micropython supported microcontroller.

I used the already build version from https://micropython.org/download/SEEED_XIAO_NRF52/.

To flash on the microcontroller press the button while connecting to your PC and a filesystem will be shown.

Copy the .uf there and the device will reboot with the Micropython firmware.

The button is very small, so this part is a bit tricky.

I used rshell to get REPL on the XIAO nRF by typing repl as command when running rshell.

There are 2 LEDs on the board.

A green system LED, numer 1 in Micropython, and a 3-in-one LED, numbered 2 to 4 in Micropython.

The color numbers are 2 (red), 3 (green) and 4 (blue).

Use the LEDs:

from board import LED

LED(1).on()

LED(2).on()

LED(3).on()

LED(4).on()

# and to turn them off

LED(1).off()

LED(2).off()

LED(3).off()

LED(4).off()

LED 4 (blue) activated, all others off:

Next some Bluetooth exploration.

In the Micropython repository is an example how to scan for Bluetooth devices.

I couldn't find any documentation for the library used here, only the C code.

Scan for bluetooth devices:

import time

from ubluepy import Scanner, constants

for _ in range(10):

s = Scanner()

scan_res = s.scan(100)

for node in [i.getScanData() for i in scan_res if i]:

for entry in node:

if entry[0] == constants.ad_types.AD_TYPE_COMPLETE_LOCAL_NAME:

print(f"{entry[1]}:", entry[2])

time.sleep_ms(100)

When wearing a Wahoo TICKR heartrate sensor this returns (together with some other devices):

AD_TYPE_COMPLETE_LOCAL_NAME: bytearray(b'TICKR 3AB9')

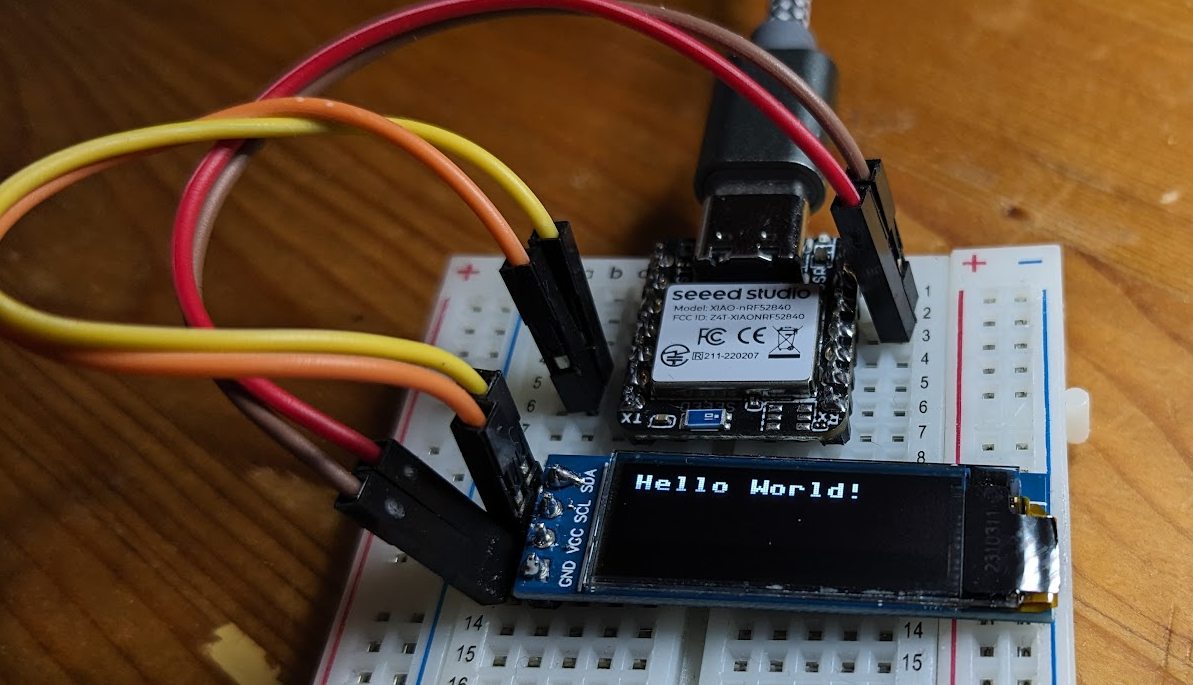

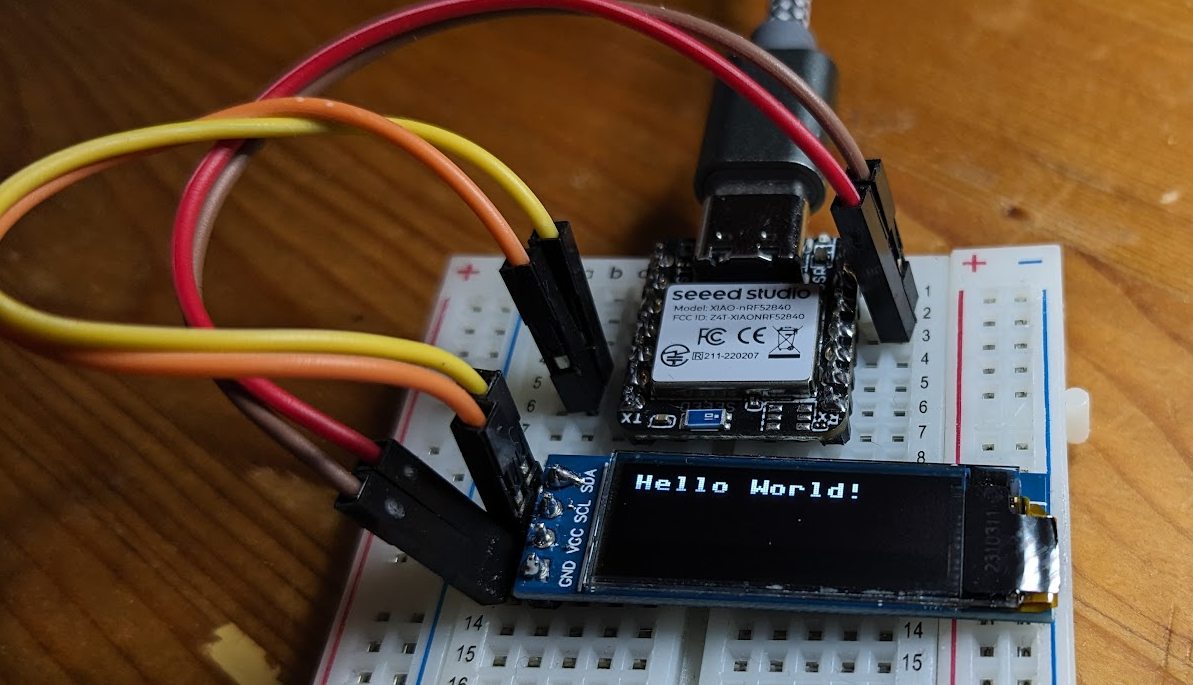

And finally the OLED display.

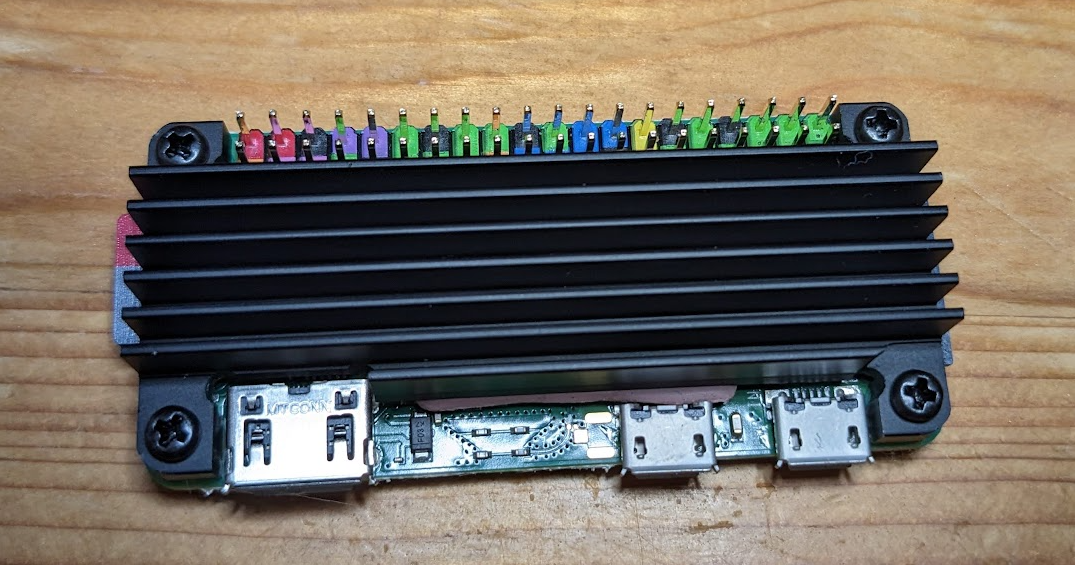

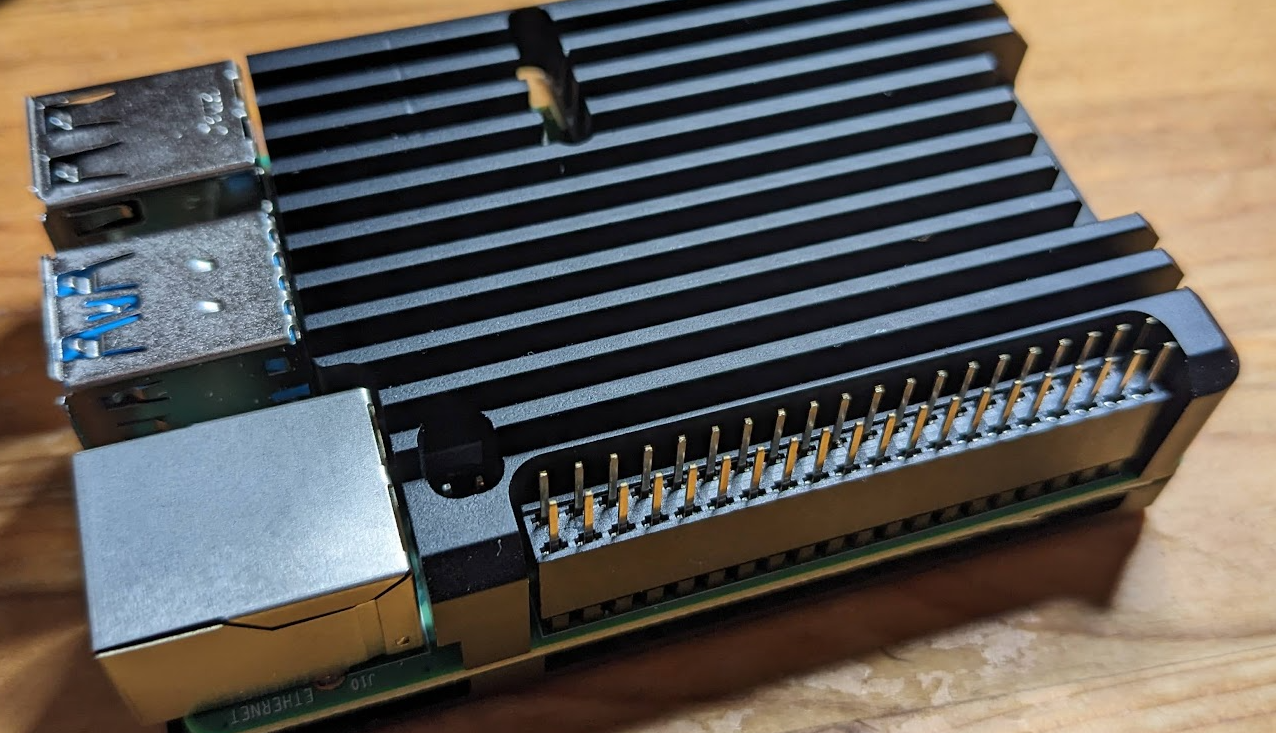

Before I could connect a display I had to solder pins on the XIAO.

For the display we need a Python module.

As in a previous post we will use the one from Micropython Github and copy it to the XIAO with cp ssd1306.py /flash.

This is a different path that for the Pico!

The Pins of the XIAO nRF are shown in the wiki from seeedstudio about the device.

The display I am using needs 3.3V, so I use the 3.3V pin.

The Python code to show a text on the display:

from machine import Pin, I2C

import ssd1306

i2c = I2C(0, sda=Pin.board.D4_A4, scl=Pin.board.D5_A5)

display = ssd1306.SSD1306_I2C(128, 32, i2c)

# clear display

display.fill(0)

# show a simple text

display.text('Hello World!', 0, 0, 1)

display.show()

Overall the nRF part in Micropython looks solid, but is not well documented.

I still plan on porting my Heartrate monitor display from Raspberry PI Zero to this Microcontroller for faster bootup time.