Process and Review thousands of Images

Six years ago I started to take a picture of a Minol heat usage meter every hour automatically with a Raspberry PI Zero. I wrote about the find and crop procedure and the processing of a folder back then. Additionally I build a prototype to recognize the digits on the display using a CNN, but I never finished and polished the code. This summer it feels like it is time to either automate everything or abandon the project.

Before I can retrain the CNN I need to preprocess all the photos the PI automatically collected over the years. Over 50k photos were shot and the first step is to see if the cropping is still working. Of course the position of the camera changed a bit every time I moved the couch it leans on, so I had to tweak the cropping code a bit.

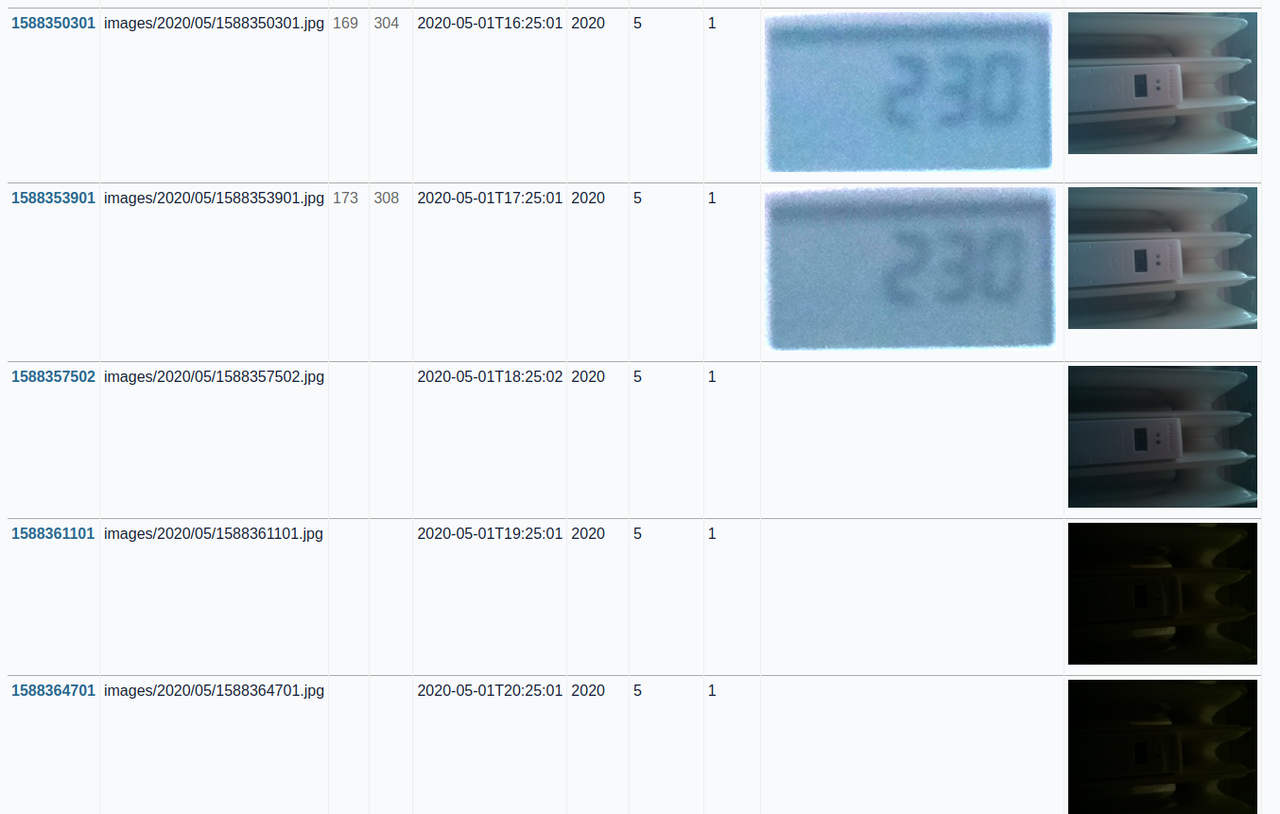

meta information about the image to filter and facet on

a thumbnail that can be displayed with datasette-render-images installed

the cropped and contrast enhanced display image

Rough unoptimized code that solves my current issue:

import datetime import io from pathlib import Path import cv2 from sqlite_utils.db import Database def find_and_crop(image): # cut center image = image[300:1500, 500:1800] # rotate (simplification; actual version has code to correct skewness) height, width, channels = image.shape center = (width / 2, height / 2) angle = 87 M = cv2.getRotationMatrix2D(center, angle, 1) image = cv2.warpAffine(image, M, (height, width)) # greyscale gray = cv2.cvtColor(image.copy(), cv2.COLOR_BGR2GRAY) gray = cv2.blur(gray, (11, 11)) thresh = cv2.threshold(gray, 70, 255, cv2.THRESH_BINARY)[1] contours, hierarchy = cv2.findContours(thresh, 1, 2) for cnt in contours: x, y, w, h = cv2.boundingRect(cnt) if w > 200 and w < 450 and h > 100 and h < 250: break else: # no rectangle was found return {} # crop image and increase brightness cropped = image[y : y + h, x : x + w] contrast = cv2.convertScaleAbs(cropped, alpha=3, beta=0) return { "h": h, "w": w, # imencode returns a tuple: is_success, image "display_image": io.BytesIO(cv2.imencode(".jpg", contrast)[1]).getbuffer(), } def thumbnail(image, width = 200): (h, w) = image.shape[:2] image = cv2.resize(image, (width, int(h * width / float(w))), interpolation=cv2.INTER_AREA) return io.BytesIO(cv2.imencode(".jpg", image)[1]).getbuffer() db = Database("minol.db") img_table = db["images"] img_table.create( { "id": str, "filename": str, "h": int, "w": int, "date": str, "year": int, "month": int, "day": int, "thumbnail": str, "display_image": str, }, pk="id", transform=True, ) for filename in Path("images").glob("202*/*/*jpg"): print(filename) ts = filename.stem dt = datetime.datetime.utcfromtimestamp(int(ts)) image = cv2.imread(str(filename)) img_table.upsert( { "id": ts, "filename": str(filename), "date": dt, "year": dt.year, "month": dt.month, "day": dt.day, "thumbnail": thumbnail(image), **find_and_crop(image), }, pk="id", )

The script is detecting the display on the photo, cropping the display and saving everything to an sqlite table.

Example from my actual table:

Photos shot at night are too dark to crop the display, so they have no display image. To get a consistent data curve later one image a day is enough, but because of the angle of the sun and reflections the best image can be at different times.